Xinhua News Agency, Beijing, July 11: The State Council Information Office released a white paper on China’s marine ecological environment protection on July 11. The full text is as follows:

Protection of marine ecological environment in China

(July 2024)

The People’s Republic of China

The State Council Information Office

Contents

Foreword

I. Building a harmonious marine ecological environment

II. Promoting the protection of marine ecological environment as a whole

(1) Planning guidance

(2) Protection according to law

(3) Institutional safeguards

III. Managing the marine ecological environment systematically

(1) Comprehensive management of key sea areas

(2) Collaborative control of land-based pollution

(3) Precise prevention and control of marine pollution

(4) Building beautiful bays

IV. Carrying out marine ecological protection and restoration scientifically

(1) Building a solid marine ecological barrier

(2) Implementing marine ecological restoration

(3) Holding the line of defense against marine disasters

(4) Carrying out demonstration projects for building beautiful islands

(5) Building an ecological coastal zone

V. Strengthening supervision and management of the marine ecological environment

(1) Implementing spatial use control and environmental zoning control

(2) Carrying out monitoring and investigation

(3) Strict supervision and law enforcement

(4) Strengthening inspection and assessment

VI. Raising the level of green and low-carbon marine development

(1) Promoting the efficient use of marine resources

(2) Strengthening the green foundation of the marine economy

(3) Exploring ways to realize the value of ecological products

(4) Carrying out green and low-carbon actions by the whole public

VII. Conducting all-round international cooperation in marine ecological environment protection

(1) Actively fulfilling conventions and participating in global governance

(2) Expanding the "circle of friends" in maritime cooperation

(3) Expanding cooperation in deep-sea and polar scientific research

(4) Conducting extensive foreign aid training

Concluding remarks

Foreword

The ocean covers about 71% of the Earth’s surface. It is the cradle of life and a source of human civilization. The marine ecological environment bears on the ecological balance of the planet, the rational use of resources, the sustainable development of human civilization, and both the present and the future of the maritime community with a shared future. Protecting it is essential to safeguarding national ecological security, promoting the sustainable development of the ocean, and achieving harmony between humanity and the sea. Protecting and improving the marine environment, and protecting and sustainably using marine resources, are the shared responsibility and mission of all countries.

China is a firm advocate and an active practitioner of marine ecological environment protection, which bears on building a Beautiful China and a strong maritime country. Over the years, China has prioritized ecology and pursued systematic governance, balanced development with protection, supported high-quality development through high-level protection, and worked to build a harmonious marine ecological environment.

Since the 18th National Congress of the Communist Party of China (CPC), the General Secretary of the CPC Central Committee has set out a series of important positions on marine ecological environment protection, stressing the need to "care for the ocean as we care for our own lives". Guided by his thought on ecological civilization, China has carried out a series of fundamental, pioneering and long-term initiatives in response to the new circumstances, tasks and requirements of marine ecological environment protection, bringing about historic, transformative and overall changes in this field. Through unremitting efforts, the overall quality of China’s marine ecological environment has improved, the ecosystem services of some sea areas have improved significantly, marine resources are being developed and used in an orderly manner, the governance system for the marine ecological environment has been steadily refined, and people’s sense of gain, happiness and security with regard to the sea has grown markedly. Remarkable results have been achieved. China has also actively promoted international cooperation in marine environmental protection, faithfully fulfilled its obligations under international conventions, and put forward Chinese proposals for global marine environmental governance, contributing China’s strength and demonstrating the actions and commitment of a responsible major country.

This white paper is issued to introduce the concepts, practices and results of China’s marine ecological environment protection, to deepen the international community’s understanding of this work, and to promote international cooperation in marine ecological environment protection.

I. Building a harmonious marine ecological environment

Marine affairs bear on the survival and development of the nation and on the rise and fall of the country. Protecting the marine ecological environment bears on achieving a modernization in which humanity and nature coexist in harmony. China fully applies the new development philosophy and attaches great importance to marine ecological environment protection. Based on its basic national conditions and stage of development, it has continuously deepened its understanding of this work, improved the protection system, and accelerated the building of a marine ecological civilization.

After the founding of the People’s Republic of China, as marine undertakings developed, China attached great importance to the marine ecological environment and paid close attention to its protection. Following the establishment of the State Oceanic Administration in 1964, a management system for the marine ecological environment was gradually put in place. The Marine Environmental Protection Law, promulgated in 1982, put China’s marine environmental protection on a legal footing. Its revision in 1999 shifted the focus from pollution prevention alone to pollution prevention combined with ecological protection. China formulated China Marine Agenda 21, has implemented the UN 2030 Agenda for Sustainable Development, and has promoted the systematic and specialized development of marine ecological environment protection. In 2023, the Marine Environmental Protection Law was revised again, marking a systematic shift toward integrated land-sea planning and comprehensive management.

To strengthen the coordination of land and sea pollution prevention and control and the integrity of ecological environment protection, China has incorporated marine ecological environment protection into the national ecological environment protection system, progressively connected the management of land and sea, strengthened the overall coordination of land and sea ecological environment protection functions, and established and improved a management system for the marine ecological environment. By continuously strengthening the prevention and control of marine pollution, actively carrying out marine ecological protection and restoration, and pressing ahead with the critical battle for comprehensive management of key sea areas, China has greatly improved the quality of its marine environment, significantly improved the ecosystem services of some sea areas, and markedly accelerated the orderly development and use of resources and the green transformation of the marine economy.

China’s marine ecological environment protection has developed by carrying forward past practice and innovated through exploration, striving to build a harmonious marine ecological environment.

- Respecting nature and prioritizing ecology. China firmly upholds the concept of respecting, adapting to and protecting nature. It seeks to understand the natural laws of marine ecosystems objectively and, starting from their succession and internal mechanisms, to strengthen their capacity for self-regulation, self-purification and self-recovery and to enhance their stability and ecosystem services. It keeps to bottom-line thinking and ecological priority, incorporates marine ecological civilization into the overall layout of marine development, builds a protective barrier for the marine ecological environment, develops and uses marine resources scientifically and rationally, and promotes harmony between people and the sea.

- Integrated protection and systematic governance. Protecting the marine ecological environment is a systematic undertaking. China takes a systems approach and plans holistically, giving equal weight to development and protection and to pollution prevention and ecological restoration, and advances marine ecological environment protection with land and sea coordinated. It links rivers with the sea and mountains with the sea; connects upstream and downstream areas, land and sea, and river basins with coastal waters; builds cooperative mechanisms of regional linkage and interdepartmental coordination for protection, governance, supervision and law enforcement; and explores a comprehensive governance system that integrates coastal areas, river basins and sea areas.

- Law-based governance and strict supervision. China protects the marine ecological environment with the strictest systems and the strictest rule of law. It upholds law-based governance, revises relevant laws and regulations in a coordinated way, has established a legal system for marine ecological environment protection, and applies the most stringent governance regime. It strengthens regular, whole-process supervision and management covering environmental zoning control, monitoring and investigation, supervision and law enforcement, and assessment and inspection; leverages central ecological and environmental protection inspections and national natural resources inspections; and cracks down hard on acts that damage the marine ecological environment.

- Innovation-driven and technology-led development. China pursues innovation-driven development, strengthening innovation in technologies, monitoring and evaluation, and institutions and mechanisms for marine ecological environment protection, making decisions scientifically and targeting policies precisely, and promoting the digital and intelligent upgrading of the field. It implements the strategy of "revitalizing the ocean through science and technology", gives full play to the leading role of science and technology, works to overcome the technological bottlenecks that constrain marine ecological environment protection and the high-quality development of the marine economy, and uses land-, sea-, air- and space-based means to improve its capabilities and technical standards in monitoring, governance, supervision and emergency response.

- Green transformation and low-carbon development. Blue seas and silver beaches are also lucid waters and lush mountains, and mountains of gold and silver. China adheres to green development, explores pathways for green marine development, promotes the shift of marine development toward a circular model, vigorously develops green industries such as eco-tourism and eco-fishery, and keeps broadening the ways in which the value of ecological products is realized, so that high-level protection of the marine ecological environment drives high-quality economic development and a high quality of life in coastal areas. In line with the strategic goals of carbon peaking and carbon neutrality, and with pollution and carbon reduction as the starting point, China promotes carbon sink enhancement and emission reduction in the marine field in a coordinated way, develops new green and low-carbon business forms such as marine ranching and offshore wind power, and accelerates the green, low-carbon and sustainable transformation of marine industries.

- Government leadership and pluralistic governance. The government plays the leading role in marine ecological environment protection, especially in institutional design, scientific planning, regulatory services and risk prevention, under a working mechanism in which the central government plans, provinces take overall responsibility, and cities and counties implement. Market entities, market factors and social capital are mobilized to participate, creating sustainable models for marine environmental protection and ecological restoration. China strives to build a modern marine ecological environment governance system in which Party committees provide leadership, governments take the lead, enterprises serve as the main actors, and social organizations and the public participate.

- Putting the people first and engaging the whole of society. China adheres to the principle that ecological progress should benefit the people, serve the people and be for the people. It strives to meet people’s new expectations for a good ecological environment, effectively addresses prominent marine ecological environment problems, and keeps improving the quality of the seaside environment people can access, so that they can eat green, safe and reliable seafood and enjoy blue skies and clean beaches, with a growing sense of gain, happiness and security. Relying on the people and working for the people, it promotes marine ecological culture, fosters a public consensus and a conscious commitment to active participation in marine ecological environment protection, and creates a new pattern of joint contribution, joint governance and shared benefits.

- A global vision and win-win cooperation. Guided by the vision of a maritime community with a shared future, China, with an open mind, an inclusive attitude and a broad perspective, stands with people around the world in meeting marine ecological environment challenges together, firmly safeguards the common interests of humanity, and seeks to leave blue seas and blue skies to future generations. Upholding mutual trust, mutual assistance and mutual benefit, China promotes international cooperation in marine ecological environment protection, shares the fruits of protection and development, and contributes Chinese wisdom and strength to building a clean and beautiful ocean.

II. Promoting the protection of marine ecological environment as a whole

China attaches great importance to the construction of marine ecological civilization and protection of marine ecological environment, strengthens top-level design, adheres to planning guidance, strengthens overall coordination, establishes and improves laws, regulations and institutional systems, and constantly improves institutional mechanisms to promote the smooth development of marine ecological environment protection.

(1) Planning guidance

Responding to the new circumstances, tasks and requirements of marine ecological environment protection, China has formulated special plans for marine ecological environment protection and plans for related fields, based on the national economic and social development plan and aligned with national territorial spatial planning, to guide this work.

Planning marine ecological environment protection systematically. Plans for marine ecological environment protection provide the basic framework for guiding protection work and advancing marine ecological civilization. The national economic and social development plan sets out strategic arrangements for marine ecological environment protection. National territorial spatial planning makes overall arrangements for a harmonious land-sea spatial pattern and provides strategic spatial guidance for protecting the marine ecological environment in the sea areas under China’s jurisdiction. In recent years, China issued the 14th Five-Year Plan for Marine Ecological Environment Protection, which explores a new tiered governance system covering the national, provincial, municipal and bay levels, promotes a new pattern of comprehensive governance with the bay as the basic unit and vehicle for action, and guides marine ecological environment protection in the new era. China also issued the special plan for scientific and technological innovation in the ecological environment field, the plan for ecological protection supervision and the plan for ecological environment monitoring, all for the 14th Five-Year Plan period, as well as the national plan for marine dumping areas (2021-2025). These guide scientific and technological innovation in marine ecological environment protection, supervision of marine ecological protection and restoration, monitoring and evaluation of the marine ecological environment, and the management of marine dumping, providing solid support for comprehensively strengthening marine ecological environment protection.

Laying out marine development and protection in line with ecological priority. Marine space is the basic carrier for protecting and restoring marine ecosystems, arranging marine development and utilization activities, and carrying out the various tasks of marine governance, and marine spatial planning is an important tool for coordinating these activities. Spatial plans such as the National Marine Functional Zoning, the National Marine Major Function Zoning Plan and the National Island Protection Plan were issued successively and played an active role in the classified protection and rational use of sea areas and islands at different stages. After the overall decision in 2018 to integrate multiple plans into one, China issued several opinions on establishing a territorial spatial planning system and supervising its implementation, issued the Outline of the National Territorial Spatial Plan (2021-2035), compiled the Spatial Plan for Coastal Zones and Coastal Waters (2021-2035), and rolled out territorial spatial plans at all levels in coastal areas, forming a marine spatial planning system that integrates land and sea.

Advancing protection and restoration in an orderly manner. To plan and design the protection and restoration of important coastal ecosystems under the spatial guidance of territorial spatial planning, China formulated and implemented, for the first time, the Construction Plan for Major Projects of Coastal Ecological Protection and Restoration (2021-2035). Focused on improving the quality and stability of coastal ecosystems and enhancing their services, it establishes an overall layout of major coastal ecological protection and restoration projects described as "one belt, two corridors, six districts and multiple points". To improve the diversity, stability and sustainability of marine ecosystems, China has issued the 14th Five-Year Plan for Marine Ecological Protection and Restoration, the Special Action Plan for Mangrove Protection and Restoration (2020-2025) and the Special Action Plan for the Prevention and Control of Spartina alterniflora (2022-2025). These plans set out a scientific and rational layout, adapt measures to local conditions, apply policies by zone and category, and advance the work in a coordinated way during the 14th Five-Year Plan period.

(2) Protection according to law

The rule of law is the fundamental principle guiding marine ecological environment protection. China has improved the system of laws and regulations in this field, strengthened judicial protection, and carried out legal awareness campaigns, fostering a social atmosphere in which everyone respects, studies, abides by and applies the law, and ensuring that marine ecological environment protection operates under the rule of law.

Building and improving a system of laws and regulations for marine ecological environment protection. China attaches great importance to legislation in this field and has promulgated a series of relevant laws and regulations. The Marine Environmental Protection Law, promulgated in 1982, has gone through two revisions and three amendments to keep pace with changing circumstances, and it is the overarching national law on marine environmental protection. Around it, seven administrative regulations, including the regulations on marine dumping, more than 10 departmental rules and more than 100 normative documents have been formulated, and more than 200 technical standards and specifications have been issued, basically establishing a legal and regulatory system for marine ecological environment protection. Beyond this dedicated law, other important laws contain relevant provisions: the Law on the Administration of Sea Area Use and the Island Protection Law provide for the sustainable use, protection and improvement of the ecological environment of sea areas and islands; the Wetland Protection Law and the Fisheries Law provide for the protection of coastal wetlands and fishery resources; and the Yangtze River Protection Law and the Yellow River Protection Law provide for the planning, monitoring and restoration of estuaries. Coastal provinces, autonomous regions and municipalities have enacted local regulations or government rules on marine ecological environment protection, and Guangxi, Hainan and other places have passed dedicated legislation to protect coastal beaches and rare animal and plant resources.

Strengthening judicial protection of the marine ecological environment. Chinese courts have actively explored judicial protection of the marine environment and have tried more than 5,000 civil disputes involving the marine environment since 1984. Since 2015, maritime courts have concluded more than 1,000 administrative cases involving the marine environment and have explored hearing criminal cases such as marine pollution, illegal sand mining at sea and the illegal harvesting of precious and endangered aquatic wildlife. Drawing on this practical experience, China has gradually formed a "three-in-one" judicial system for marine environmental protection that combines criminal, civil and administrative litigation, as well as a marine environmental public interest litigation system with Chinese characteristics, building a solid judicial line of defense for marine ecological environment protection.

Raising public awareness of laws on marine ecological environment protection. Through press conferences, lectures and training sessions, media coverage, knowledge contests and the distribution of publicity materials, laws and regulations on sea areas, islands, marine environmental protection and the management of fishing vessels at sea have been publicized. Some areas have introduced new formats such as VR (virtual reality) experiences, interactive games and short films, with remarkable results. Outreach to coastal areas, marine-related enterprises and the public has been stepped up to urge local governments to protect and use sea areas scientifically and rationally, press marine-related enterprises to fulfill their responsibilities, and raise public awareness of marine laws and regulations, so that more organizations and people understand, protect and care for the ocean.

(3) Institutional safeguards

China has established a series of systems for marine ecological environment protection, basically integrated land and marine management systems and mechanisms, steadily improved the management system for marine ecological environment protection, and continuously raised the efficiency of marine ecological environment governance.

Building the "four beams and eight pillars" of the protection system. China attaches great importance to protecting the marine ecological environment and regulating the development and use of marine resources through institutions and, drawing on practice and in accordance with the law, has established the main framework, the "four beams and eight pillars", of the marine ecological environment protection system. For pollution prevention and control, it has established systems for filing sea-bound sewage outlets, environmental impact assessment approval, marine dumping permits and emergency response. For ecological protection and restoration, it has established systems for marine ecological protection red lines, nature reserves and natural coastline control. For supervision and management, it has established systems for territorial spatial use control, ecological environment zoning control, central ecological and environmental protection inspections, national natural resources inspections, target responsibility and assessment, and monitoring and investigation. For green development, it has established systems for marine ecological protection compensation, fishing quotas and fishing licenses, and the paid use of sea areas.

Forming a management system featuring "interdepartmental coordination and linkage between higher and lower levels". After years of development, China’s management system for marine ecological environment protection has grown from nothing and from weak to strong. In 2018, the State Council’s institutional reform assigned responsibility for marine environmental protection to the ecology and environment authorities, and responsibility for marine protection, restoration, development and use to the natural resources authorities. The transportation, maritime, fishery, and forestry and grassland authorities, the coast guard and the military take part in marine ecological environment protection according to their respective functions. This connected the management of land and sea and strengthened both the coordination of land and sea pollution prevention and control and the integrity of ecological environment protection. Ecological environment supervision bureaus were set up for the Haihe River Basin and North Sea Area, the Pearl River Basin and South China Sea Area, and the Taihu Lake Basin and East China Sea Area to oversee the marine ecological environment. Coastal provinces, autonomous regions and municipalities bear specific responsibility for ecological environment management in coastal waters and carry out key tasks, major projects and important measures to advance marine ecological environment protection and management. Over the years, China has formed a working mechanism for marine ecological environment protection featuring multi-sectoral coordination and linkage between central and local governments, and has initially established a comprehensive management system covering coastal areas, river basins and sea areas.

III. Managing the marine ecological environment systematically

China combines focused efforts on key problems with systematic governance, coordinates land and sea, and links rivers with the sea in managing the marine ecological environment, continuously improving its quality.

(1) Comprehensive management of key sea areas

The Bohai Sea, the Yangtze River Estuary-Hangzhou Bay, the Pearl River Estuary and other key sea areas sit at strategic intersections of China’s high-quality coastal development. They have developed economies, dense populations and intensive marine development and use, and their marine ecological environments have distinct regional characteristics, with problems that are relatively concentrated and prominent. Comprehensive management of these areas is therefore especially important.

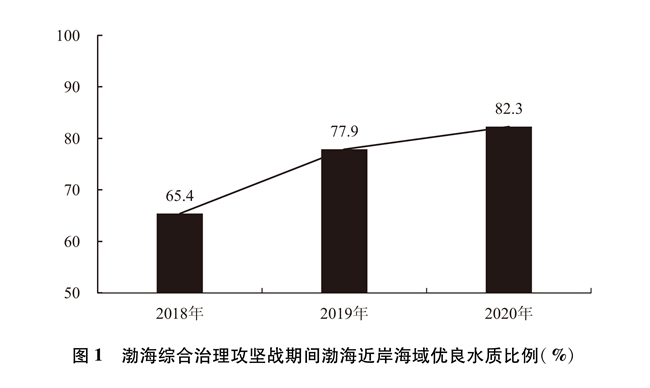

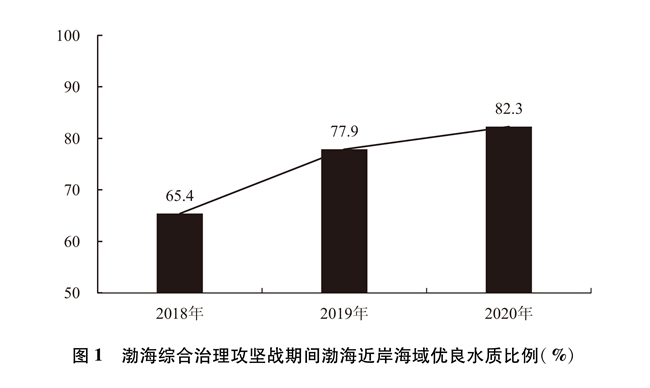

Winning the critical battle for comprehensive management of the Bohai Sea. The Bohai Sea is a semi-enclosed inland sea with poor water exchange and limited self-purification capacity. In 2018, China launched its first critical battle for pollution prevention and control in the marine field, making comprehensive management of the Bohai Sea one of the landmark battles of the 13th Five-Year Plan period. Following the overall approach of "laying out plans in the first year, making full-scale progress in the second, and achieving initial results in the third", and focusing on the "1+12" cities around the Bohai Sea, China concentrated on raising the proportion of coastal waters with excellent water quality, eliminating inferior Class V water in rivers flowing into the sea, investigating and rectifying sea-bound sewage outlets, and restoring coastal wetlands and shorelines. After three years of hard work, all the core targets and tasks of comprehensive management of the Bohai Sea were completed to a high standard, initially curbing the deterioration of the Bohai Sea’s ecological environment and steadily improving its quality. In 2020, the proportion of coastal waters in the Bohai Sea with excellent water quality (Class I and II) reached 82.3%, up 15.3 percentage points from 2017, before the campaign began.① Inferior Class V water was completely eliminated at the national control sections of the 49 rivers flowing into the Bohai Sea, and a total of 8,891 hectares of coastal wetlands and 132 kilometers of coastline were restored.

Advancing the critical battle for comprehensive management of key sea areas on all fronts. Since 2021, building on the achievements of the Bohai Sea campaign, China has extended the battle to the Yangtze River Estuary-Hangzhou Bay and to the Pearl River Estuary and its adjacent waters, as one of the landmark battles in deepening pollution prevention and control during the 14th Five-Year Plan period. It has made systematic arrangements for 8 coastal provinces and municipalities and 24 coastal cities in the three key sea areas, controlled pollution precisely, scientifically and in accordance with the law, and fully implemented comprehensive governance, systematic governance and source control with land and sea coordinated. Overall water quality in the key sea areas is improving: in 2023, the proportion of waters with excellent water quality (Class I and II) in the Bohai Sea, the Yangtze River Estuary-Hangzhou Bay and the Pearl River Estuary was 67.5%, up 8.8 percentage points from 2020.

(2) Collaborative control of land-based pollution

Marine environmental problems manifest in the sea but are rooted on land. China has taken effective measures to coordinate the control of land-based pollution, control the key channels through which pollutants reach the ocean, and reduce the overall pressure of land-based pollution on the marine environment.

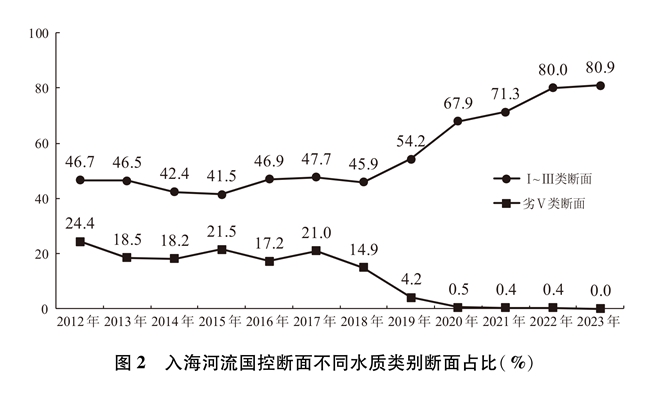

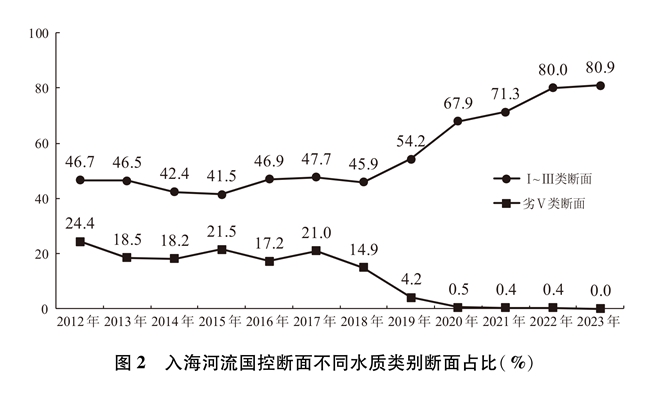

Prevent and control pollution in rivers flowing into the sea. Rivers are the most important pathway by which land-based pollutants enter the sea. China has actively improved the quality and efficiency of urban sewage treatment, built and upgraded separate stormwater and sewage pipe networks, strengthened supervision of the sewage treatment industry, and reduced the impact of urban industrial and domestic sewage on the water quality of rivers flowing into the sea. Since 2012, the construction of sewage treatment infrastructure in coastal areas has accelerated significantly, and sewage treatment plants in cities at or above the prefecture level have essentially all been upgraded to the Grade I-A discharge standard. Rural environmental improvement has also been carried out. Since the start of the 14th Five-Year Plan period, environmental improvement has been completed in an additional 17,000 administrative villages in coastal provinces, and pollution prevention and control plans for livestock farming have been compiled for 170 major livestock-producing counties. The treatment rate of rural domestic sewage has exceeded 45%, greatly reducing agricultural and rural sewage discharge. To address water pollution and eutrophication in coastal waters caused by excessive nitrogen discharges from river basins, China is establishing a comprehensive control system that integrates coastal areas, river basins and sea areas, exploring the extension of total nitrogen control to the upper reaches of rivers flowing into the sea, and promoting total nitrogen control in these rivers under a "one river, one policy" approach. From 2012 to 2017, water quality at the national control sections of rivers flowing into the sea remained stable with some improvement, and it improved markedly after 2018. At present, sections with excellent water quality (Class I-III) account for about four-fifths of the state-controlled sections of rivers flowing into the sea, and sections worse than Class V (which have lost their use functions) have been essentially eliminated.

Guard the key gateway for coastal pollution entering the sea. Sewage outfalls into the sea are key nodes through which coastal land-based pollution is discharged into the sea. China issued the Implementation Opinions on Strengthening the Supervision and Management of Sewage Outfalls into Rivers and the Sea, promoted the investigation, monitoring, source tracing and remediation of sewage outfalls into the sea as a whole, and established and improved a whole-chain management system covering coastal water bodies, outfalls, sewage pipelines and pollution sources. Following the requirement to "check every outfall that should be checked", the number, distribution, discharge characteristics and responsible parties of all types of sewage outfalls into the sea have been identified, and source-tracing rectification and the assignment of responsibility have been promoted. By the end of 2023, China had investigated more than 53,000 sewage outfalls into the sea and completed the remediation of more than 16,000 of them, which has played an important role in improving the environmental quality of coastal waters. China is building a unified information platform for sewage outfalls into the sea to further standardize their siting and management, and strictly prohibits new industrial outfalls and urban sewage treatment plant outfalls in nature reserves, important fishery waters, beaches, ecological protection red line areas and other such areas.

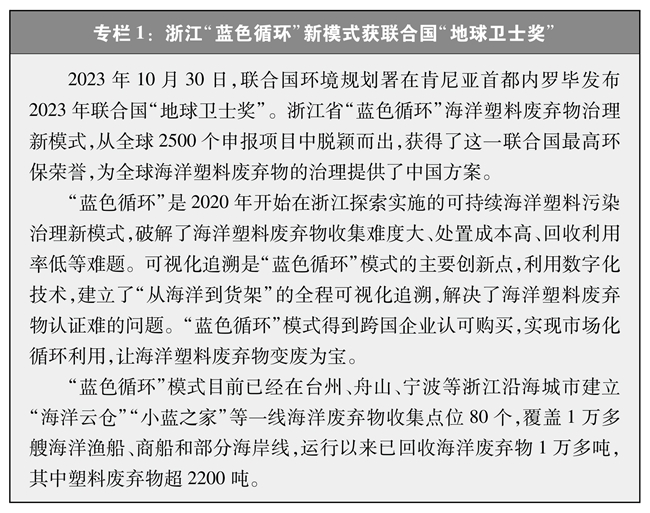

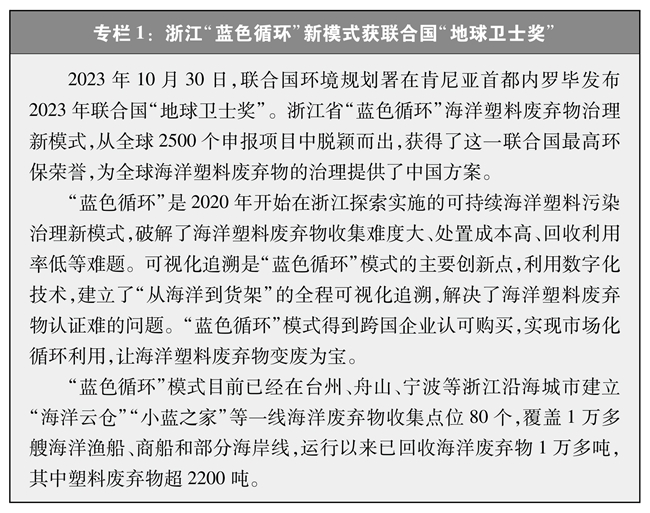

Clean up and remediate marine litter. The Opinions on Further Strengthening the Control of Plastic Pollution and the 14th Five-Year Plan Action Plan for Plastic Pollution Control were issued to control litter at the source. China is further establishing and implementing a system for monitoring, intercepting, collecting, salvaging, transporting and treating marine litter. All coastal cities regularly carry out prevention, control and remediation of litter entering the sea via rivers and in the coastal waters of key sea areas through a "marine sanitation" system. Zhejiang Province's "Blue Circle" model for marine plastic waste management won the United Nations Champions of the Earth award. China is promoting joint prevention and treatment of litter in rivers, lakes and seas. In 2022, special beach and floating litter cleanup operations were carried out in 11 key bays, including Jiaozhou Bay, deploying 188,100 people and removing about 55,300 tons of beach and floating litter of all kinds. To consolidate and build on these results, in 2024 the special cleanup campaign in key bays was upgraded to a marine litter cleanup campaign covering coastal cities. Monitoring surveys of marine litter and microplastics have been conducted continuously. Compared with similar international surveys in recent years, the average densities of marine litter and offshore microplastics in China's coastal waters are at a low level.

(3) Precise prevention and control of marine pollution

Adhering to both development and protection, China continuously strengthens routine supervision of marine engineering, ocean dumping, mariculture, maritime transport and other industries, responds actively to sudden environmental pollution incidents, comprehensively improves the prevention and control of marine pollution, and strives to reduce the impact of marine development and utilization activities on the marine ecological environment.

Strictly control the impact of marine engineering and ocean dumping on the ecological environment. China continues to optimize environmental impact assessment (EIA) management, starting from the source to strictly control marine engineering projects such as land reclamation and sea sand mining. Pollution prevention and control in offshore oil and gas exploration and development has been strengthened, with the state exercising unified authority over EIA approval and pollutant discharge supervision. Work has begun on technical specifications for pollutant discharge permits for offshore projects, to bring such projects under pollutant discharge permit management in accordance with the law. Dumping sites are selected and designated according to the principles of science, rationality, economy and safety, and their operating status is evaluated scientifically and carefully to safeguard the ecological environment and navigable water depth at the sites. The dumping permit system is strictly enforced, and off-site supervision is carried out through the Automatic Identification System (AIS) for ships, online monitoring of ocean dumping and other means, to minimize the impact of waste dumping on the ecological environment.

Prevent and control mariculture pollution systematically. China has issued and implemented the Opinions on Accelerating the Green Development of Aquaculture and the Opinions on Strengthening the Supervision of the Ecological Environment of Marine Aquaculture, formulated discharge standards, strengthened EIA management, promoted the classified rectification of aquaculture outfalls and the monitoring of tail water, and systematically strengthened environmental supervision of marine aquaculture. Coastal provinces (autonomous regions and municipalities) have introduced standards for the discharge of aquaculture tail water and strengthened supervision of discharges. Mariculture has been included in the National Catalogue for Classified Management of Environmental Impact Assessment of Construction Projects and is subject to EIA management. Under the requirement to "ban some, merge some, and standardize some", localities have cleaned up and rectified illegal and unreasonably sited aquaculture tail water outlets, promoted environmental upgrading of pond, industrial and cage farming, and improved the farming environment. Coastal provinces, cities and counties have issued plans for aquaculture waters and tidal flats, scientifically delineating prohibited, restricted and permitted zones for marine aquaculture.

Strengthen the prevention and control of pollution from ships and ports. China strictly enforces the Control Standard for Discharge of Water Pollutants from Ships, organizes special campaigns to prevent and control water pollution from ships, and incorporates environmental protection requirements into ship technical regulations. It is further implementing a joint supervision system for the transfer and disposal of water pollutants from ships, and coastal provinces (autonomous regions and municipalities) have essentially completed facilities for receiving, transferring and disposing of ship pollutants in ports. China continues to supervise and inspect ship fuel quality, strengthens supervision of the installation and use of shore power facilities by berthed ships, and identifies and eliminates potential pollution hazards.

Establish an emergency response system for marine environmental emergencies. China has issued and implemented the National Emergency Response Plan for Major Oil Spills at Sea and the Emergency Response Plan for Oil Spill Pollution in Offshore Oil Exploration and Development, defining the emergency organization system, response procedures, information management and release, and safeguard measures, and establishing a relatively complete system of emergency plans for marine oil spill pollution. Investigation of marine environmental risks has been strengthened: the three provinces and one municipality around the Bohai Sea have completed risk assessments and environmental emergency plan filing for more than 5,400 key enterprises involving hazardous chemicals, heavy metals, industrial waste and nuclear power. China is developing a national command system for marine ecological and environmental emergencies, building an intelligent platform that integrates communication, monitoring, decision-making, command and dispatch, and improving information-based capacity to respond to emergencies. An "oil fingerprint" identification system was developed and more than 3,200 crude oil samples were collected, achieving essentially full coverage of oil sample collection from offshore oil exploration and development platforms and providing an important basis for resolving liability disputes over offshore oil spills and assessing oil spill pollution damage.

(4) Striving to build beautiful bays

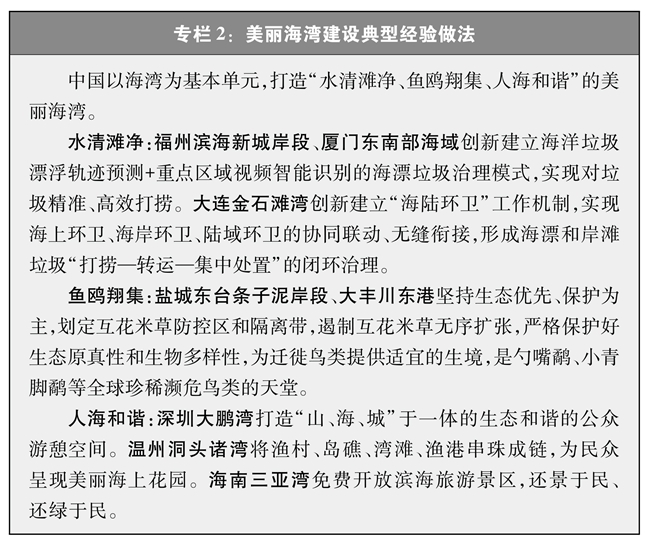

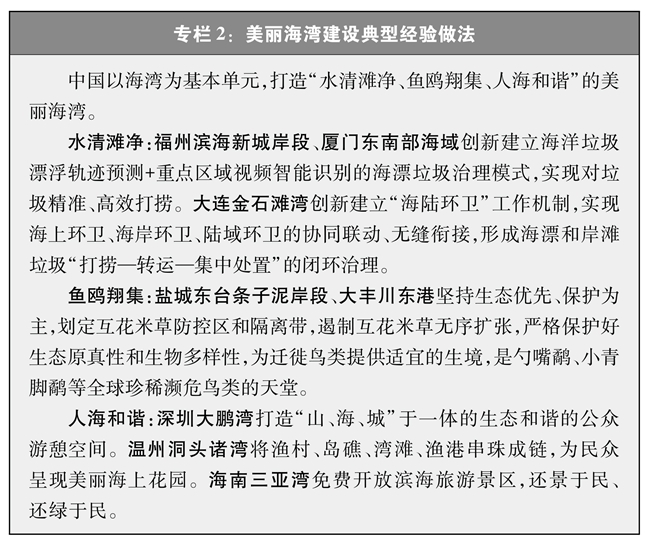

Bays are key areas for the continuous improvement of the marine ecological environment. Taking the bay as the basic unit and aiming to create beautiful bays with "clear waters and clean beaches, fish and gulls gathering, and harmony between people and the sea", China follows a "one bay, one policy" approach to pollution prevention and control in coastal waters, ecological protection and restoration, and beach environment improvement, systematically improving the ecological environment quality of its bays.

Make full arrangements for beautiful bay construction. The Outline of the 14th Five-Year Plan for National Economic and Social Development of the People's Republic of China and the Long-Range Objectives Through the Year 2035 explicitly calls for promoting the protection and construction of beautiful bays. The Opinions on Comprehensively Promoting the Building of a Beautiful China incorporate beautiful bays into the overall building of a Beautiful China and set the targets of completing about 40% of beautiful bays by 2027 and essentially all of them by 2035. The 14th Five-Year Plan for Marine Ecological Environment Protection takes beautiful bay construction as its main line, divides China's coastal waters into 283 bay construction units, and assigns key tasks, measures and objectives to each bay. The Action Plan for Improving Beautiful Bay Construction further specifies that more than 110 beautiful bays are to be built by 2027. Construction is progressing steadily. By the end of 2023, 1,682 key tasks and engineering measures had been completed, and 475 kilometers of coastline and 16,700 hectares of coastal wetlands had been rehabilitated. The share of sea area with excellent water quality exceeded 85% in 167 bays and rose compared with 2022 in 102 bays.

Take concrete measures to build beautiful bays. Formulate basic standards for beautiful bay construction, setting five categories of indicators guided by good environmental quality, healthy marine ecosystems and harmonious coexistence between people and the sea, and encouraging the addition of distinctive indicators suited to local conditions. Establish a beautiful bay construction management platform to track and evaluate progress through on-site investigation and remote sensing monitoring, promote intelligent supervision of construction, and urge governments at all levels to carry out comprehensive bay management suited to local conditions and fulfill construction tasks. Establish a diversified investment and financing mechanism, strengthening government guidance and encouraging business entities and private capital to participate in beautiful bay construction. Make combined use of fiscal and financial instruments, such as government funding, special-purpose bonds and eco-environment-oriented development (EOD) projects, to accelerate the implementation of beautiful bay projects. Strengthen demonstration and guidance by encouraging institutional and key technological innovation, selecting outstanding cases, and promoting successful experience and models to raise the overall level of beautiful bay construction. So far, two batches totaling 20 national outstanding beautiful bay cases have been selected.

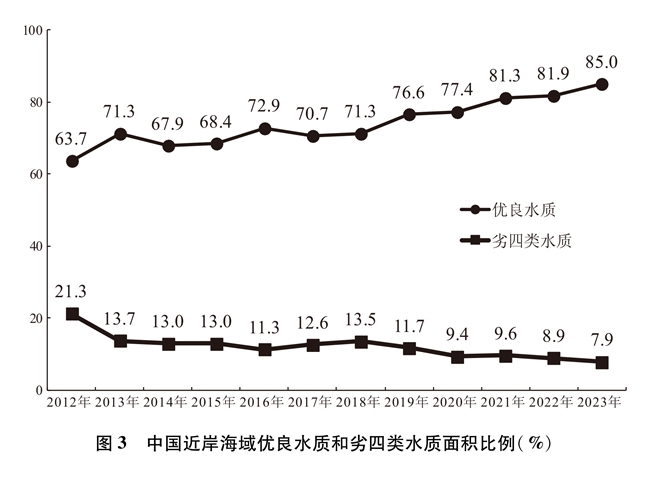

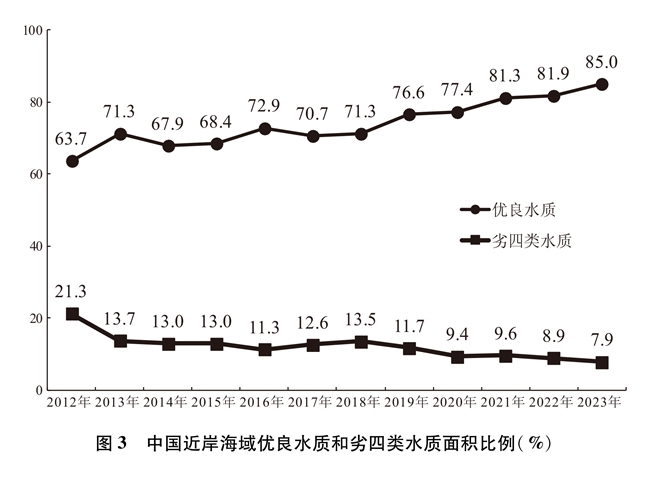

Through further comprehensive management of key sea areas, coordinated prevention and control of land and sea pollution, and the continuous construction of beautiful bays, water quality in China's coastal waters has improved overall, and in 2023 the proportion of coastal waters with excellent water quality was 21.3 percentage points higher than in 2012.

Fourth, scientifically carry out marine ecological protection and restoration

China insists on respecting, adapting to and protecting nature, promotes the integrated protection and systematic restoration of marine ecosystems through overall planning, makes science-based decisions and targeted policies, firmly holds the bottom line of ecological security, and continuously improves the diversity, stability and sustainability of marine ecosystems.

(1) Building a solid marine ecological barrier

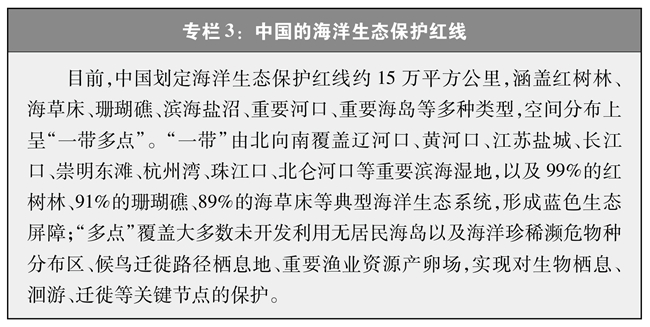

China took the lead globally in proposing and implementing an ecological protection red line system, and has built an effective marine ecological protection barrier through various means, giving the ocean enough time and space to recuperate.

Establish a marine ecological classification and zoning system. Marine ecological classification and zoning is a basic tool of modern marine management. Since 2019, China has been building a marine ecological classification and zoning system and has constructed a "two beams and four pillars" framework. Marine ecological classification is based on biogeography and aquatic settings and on four components: water body, topography, sediment and biology. Marine ecological zoning at different scales is nested from top to bottom, dividing China's offshore areas into 3 first-level, 22 second-level and 53 third-level ecological zones. In 2023, focusing on the coastal waters where human activity is most intense, 20 third-level ecological zones in coastal waters were further divided into 132 fourth-level ecological zones. By establishing unified ecological classification standards and delineating ecological zones at different scales, the natural geographic pattern of China's seas can be scientifically reflected, providing basic support for fully understanding the marine ecological baseline and for conducting detailed marine ecological assessment, protection and restoration.

Evaluate marine resource and environmental carrying capacity and the suitability of territorial space development. In 2015, the Overall Plan for the Reform of the Ecological Civilization System first set requirements for evaluating resource and environmental carrying capacity, launching assessments of the scale that natural resources and the ecological environment can support. In 2019, the Several Opinions on Establishing a Territorial Spatial Planning System and Supervising Its Implementation were issued, requiring that functional spaces be laid out scientifically and in an orderly way on the basis of evaluations of resource and environmental carrying capacity and the suitability of territorial space development. China began building a technical methodology for these evaluations and completed evaluations of marine resource and environmental carrying capacity and territorial space development suitability at the national, regional, provincial and municipal levels, providing a scientific basis for delineating marine ecological protection red lines, marine ecological space, and space for marine development and utilization.

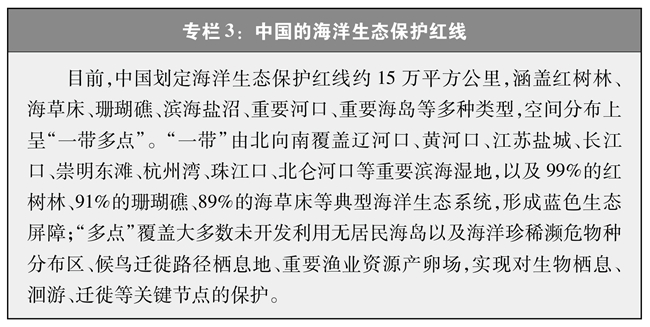

Delineate and strictly observe marine ecological protection red lines. The ecological protection red line is an important institutional innovation and a major policy arrangement in China's drive to build an ecological civilization. China has made systematic arrangements for key areas of marine ecological protection, prioritizing areas with extremely important ecological functions, such as biodiversity conservation and coastal protection, and ecologically extremely fragile areas, such as those affected by coastal erosion, and placing them under strict protection within marine ecological protection red lines, which follow a "one belt, many points" distribution. A series of documents has also been issued to regulate the limited human activities permitted within ecological protection red lines and to clarify control requirements. China will continue to monitor ecological protection red lines, evaluate protection effectiveness, survey and mark boundaries, rationally optimize their spatial layout, and improve long-term management and control mechanisms, so that one red line governs important ecological space and the bottom line of national ecological security is firmly held.

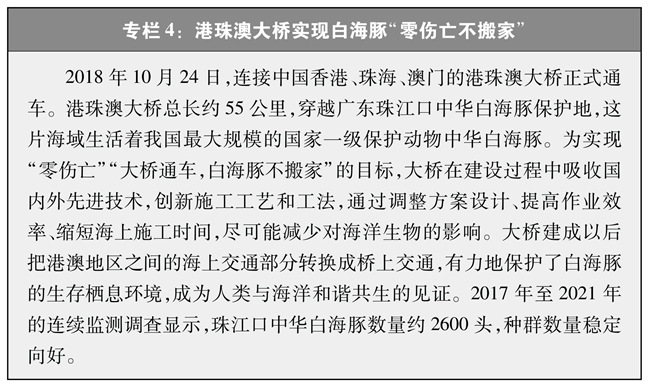

Improve the system of marine protected areas. China has placed important marine ecosystems, areas where rare and endangered marine species are naturally concentrated, and areas with concentrations of marine natural relics and landscapes under key protection as marine protected areas. After years of development, China has established 352 sea-related nature reserves protecting about 93,300 square kilometers of sea area, and has identified five candidate areas for sea-related national parks. These cover rare and endangered marine species such as spotted seals and Chinese white dolphins, typical ecosystems such as mangroves and coral reefs, and landforms such as ancient shell ridges and submerged ancient forest remains, initially forming a marine protected area system that is complete in type, rational in layout and sound in function. Thanks to marine protected areas, populations of rare marine species are gradually recovering; the number of spotted seals, an animal under first-class state protection, wintering in Liaodong Bay has remained stable at more than 2,000 each year.

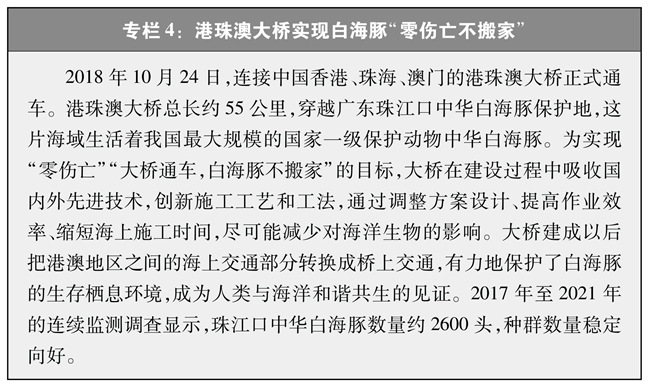

Conserve marine biodiversity. Marine life is actively and effectively protected by safeguarding ecological corridors, raising the level of species protection, conducting scientific research and monitoring, enforcing fishing moratoriums in key sea areas, and carrying out stock enhancement and release. More than 28,000 marine species have been recorded in China, about 11% of the world's recorded marine species. The National Marine Fishery Biological Germplasm Resource Bank has collected and preserved about 140,000 samples of biological resources, and the collection and preservation of biological genetic resources continues to accelerate. Stock enhancement and release is carried out in offshore waters, with about 30 billion aquatic organisms released each year. For the key protected species, namely Chinese white dolphins, sea turtles, corals and spotted seals, dedicated national protection action plans or outlines have been issued and a national species protection alliance has been established, producing fruitful results, and their populations are stable and improving. Twenty coastal wetlands, including the Dalian Spotted Seal National Nature Reserve in Liaoning Province and the Huidong Gangkou Sea Turtle National Nature Reserve in Guangdong Province, have been included in the List of Wetlands of International Importance.

(2) Implementing marine ecological restoration

China adheres to natural restoration as the primary approach, supplemented by artificial restoration, and carries out major marine ecological restoration projects in an orderly way. It has initially formed a marine ecological restoration framework, from mountaintop to ocean, underpinned by planning guidance, institutional guarantees, funding support and foundational support, laying a sound ecological foundation for a beautiful ocean in China.

Take a problem-oriented, multi-pronged approach. Treating the marine ecosystem as a whole, China accurately diagnoses marine ecological problems, sets reasonable protection and restoration objectives and tasks, adopts modes such as protection and conservation, natural restoration, assisted regeneration and ecological reconstruction, optimizes restoration measures and technologies, and tailors policies to local conditions, timing, region and type. For example, in the layout of protection and restoration, the Bohai Sea focuses on warm-temperate estuarine wetlands, the Yellow Sea on warm-temperate coastal wetlands, the East China Sea on subtropical estuaries, bays and islands, and the South China Sea on typical subtropical and tropical coastal wetlands.

Put science, technology and standards first. China has strengthened research on the succession patterns and internal mechanisms of marine ecosystems, tackled key technical problems, built standards and norms, and improved the integrity, scientific rigor and operability of ecological restoration. The first list of 10 innovative and applicable marine ecological restoration technologies has been selected. China issued the Technical Guide for Marine Ecological Restoration and a series of 11 technical guidelines for coastal ecological disaster reduction and restoration, and formulated technical manuals for restoring typical marine ecosystems such as mangroves, coastal salt marshes and oyster reefs, forming a systematic technical standard system for restoration.

Strengthen funding support for restoration. Since 2016, the central government has set up special funds to support coastal provinces (autonomous regions and municipalities) in carrying out marine ecological protection and restoration projects, mainly in key sea areas, islands and coastal zones that are important for ecological security and deliver wide-ranging ecological benefits. The Opinions on Encouraging and Supporting Social Capital to Participate in Ecological Protection and Restoration were issued to encourage and support private capital in the whole process of investment, design, restoration and management of marine ecological protection and restoration projects, and to promote a market-based investment and financing mechanism for such participation. An incentive policy has been introduced that grants new construction land quotas for qualifying mangrove afforestation.

Implement major marine ecological protection and restoration projects. From 2016 to 2023, the central government supported coastal cities in implementing 175 major marine ecological protection and restoration projects, including the "Blue Bay" remediation campaign, ecological restoration of the Bohai Sea, coastal protection and restoration projects, and mangrove protection and restoration, covering 11 coastal provinces (autonomous regions and municipalities). The central government invested a total of 25.258 billion yuan, which drove the nationwide remediation and restoration of nearly 1,680 kilometers of coastline and more than 750,000 mu (about 50,000 hectares) of coastal wetlands. The Special Action Plan for Mangrove Protection and Restoration (2020-2025) was issued, and by the end of 2023 about 7,000 hectares of mangroves had been planted and 5,600 hectares of existing mangroves restored. The 2022 land change survey shows that China's mangrove area has increased to 29,200 hectares, about 7,200 hectares more than at the beginning of this century, making China one of the few countries in the world with a net increase in mangrove area. Through these efforts, the service functions of marine ecosystems have been continuously enhanced, marine carbon sink capacity has increased, and an ecological security barrier along the coastal zone has been built. China is promoting high-quality development through high-level marine ecological protection and restoration.

(3) Strictly guarding the marine disaster defense line

Marine disasters pose a serious threat to marine ecosystems. By enhancing the resilience of coastal ecosystems and strengthening risk identification and emergency response for marine ecological disasters, China continues to improve its ability to prevent and control marine disasters and effectively safeguards the bottom line of marine ecological security.

Strengthen the ability of coastal ecosystems to withstand typhoons, storm surges and other marine disasters. China is one of the countries most seriously affected by marine disasters. To guard against major marine disasters, China is building a global three-dimensional ocean observation network that is rationally laid out, fully functional and complete as a system, aiming to basically achieve long-term operational observation of the sea areas under China's jurisdiction and of key sea areas, while continuously improving the autonomy, global reach, intelligence and precision of marine disaster early warning to provide technical support for marine disaster prevention and response. Mangroves, coastal salt marshes and other ecosystems are natural lines of defense against marine disasters. By building ecological seawalls and a comprehensive protection system that combines ecological and disaster reduction benefits, China gives full play to the disaster prevention and mitigation functions of ecosystems and comprehensively strengthens the ability of coastal ecosystems to withstand typhoons, storm surges and other marine disasters.

Enhance the ability to prevent and control marine ecological disasters. Marine ecological disasters have a serious impact on economic and social development in coastal areas. In China, they mainly take the form of local biological outbreaks such as red tides and Enteromorpha prolifera green tides. China has formulated emergency plans for red tide disasters and strengthened their early warning and monitoring, so as to detect, track and accurately warn of red tides in a timely manner, understand how they develop and evolve, and support their prevention, control and emergency response. Monitoring, early warning, prevention and control of Enteromorpha prolifera green tides in the Yellow Sea are carried out to reduce their impact. For local biological outbreaks such as jellyfish and shrimp, monitoring is conducted in key areas and periods, and information is released promptly.

(4) Carrying out demonstration projects for creating harmonious and beautiful islands

Islands are important platforms for protecting the marine environment and maintaining ecological balance. The demonstration program for creating harmonious and beautiful islands takes a single island or group of islands as the unit of creation, aiming to build a new pattern of harmony between people and islands, with green islands, clean beaches, clear waters and abundant resources, effectively promoting high-level protection and high-quality development of island areas.

The demonstration program has many highlights. It was officially launched in 2022. Centering on the concept of harmonious and beautiful islands, namely "beautiful ecology, beautiful living and beautiful production", 36 indicators were set across seven areas, including ecological protection and restoration, resource conservation and intensive use, living environment improvement, green and low-carbon development, distinctive economic development, cultural development and institutional development, to guide island areas in carrying out demonstrations. In 2023, the first batch of 33 islands was selected as harmonious and beautiful islands.

Let ecology lead the demonstrations. Adhering to ecological priority, island areas repair and restore their ecological environment, implement ecological protection and restoration projects for shorelines, island bodies and vegetation, and are encouraged to carry out carbon sequestration and sink enhancement in blue carbon ecosystems such as mangroves and seagrass beds. For example, Changdao (Long Island) in Shandong Province is building an international zero-carbon island, actively exploring ways to turn marine carbon sink resources into assets, and has issued "marine carbon sink loans" and "seagrass bed and seaweed field carbon sink loans". Island living environments continue to improve through stronger infrastructure, better external transport links, and upgraded water supply and drainage, power supply, communications and other facilities. For example, Dong'ao Island in Guangdong Province has carried out large-scale planting of flowers, trees and shrubs, built a scenic greenway running across the island, and built a mountain-and-sea boardwalk on an offshore island. Integrated development of culture and tourism is being promoted by drawing on the distinctive resources of islands, seas, history and temples, deepening the "tourism+" model with a focus on "tourism+fishery", "tourism+village" and "tourism+culture", innovating industry models that combine culture, sports and tourism, uncovering marine stories and carrying forward traditional culture. For example, Meizhou Island in Fujian Province has 33 intangible cultural heritage items and spreads Mazu culture in various forms.

(5) Building an ecological coastal zone

The coastal zone is a distinctive area where land and sea are closely linked, interact and merge, and share a common fate. It is rich in natural resources, has unique environmental conditions and sees intensive human activity. As the interface between land and ocean, China's coastal zone is a key area for building a national ecological security barrier, supporting coastal economic and social development, linking land and sea, promoting high-level development and opening up, and advancing high-quality development. In 2021, China proposed building ecological coastal zones. Taking an integrated approach to land and sea, it developed a technical and methodological system for evaluating ecological coastal zones that starts from a comprehensive assessment of marine ecological conditions and sets nine indicators in four areas: ecosystem stability, environmental quality, sustainable use of resources, and human safety and health. The system is used to identify ecological problems in coastal zones scientifically, and measures such as ecological protection and restoration, coastal greenway networks and upgraded ecological seawalls are used to build healthy, clean, safe and diverse coastal zones.

V. Strengthening the supervision and management of the marine ecological environment

China coordinates resources across all fields and pools all forces, holds to the ecological protection red line, the environmental quality bottom line and the ceiling on resource utilization, and makes combined use of zoning control, monitoring and surveys, supervision and law enforcement, and assessment and inspection. It works to make the supervision and management of the marine ecological environment more information-based, digital and intelligent, ensuring the smooth progress of marine ecological environment governance and of marine ecological protection and restoration.

(1) Implementing controls on spatial use and environmental zoning

China fully implements its strategy of major functional zones, enforces use controls in accordance with territorial spatial planning, strengthens ecological-environment zoning control in coastal waters, and sets clear bottom lines that mark the boundaries for development.

Implementing controls on the use of marine space. In the 1990s, China promulgated and implemented national marine functional zoning based on the location, resources and environmental conditions of its sea areas, defining the leading function of each functional zone and its marine environmental protection requirements. In 2015, the National Plan for Major Marine Functional Zones was issued, dividing marine space into four categories (optimized development, key development, restricted development and prohibited development) and setting basic constraints on the development and protection orientation of each sea area. Since 2019, marine functional zoning and the plan for major marine functional zones have been integrated into territorial spatial planning, achieving the "integration of multiple plans into one". In October 2022, the Outline of National Land Spatial Planning (2021-2035) was issued and implemented. In implementing and managing territorial spatial planning, coastal provinces have followed the requirements of the Outline, made detailed arrangements for marine space, scientifically demarcated ecological protection areas, ecological control areas and marine development areas, specified the functional uses, sea-use methods and ecological protection and restoration requirements of each functional area, and gradually established "sea areas and islands"

Implementing ecological-environment zoning control in coastal waters. In line with national economic and social development plans and territorial spatial plans, and with the aim of safeguarding the ecological functions and improving the environmental quality of coastal waters, China focuses on enforcing the ecological protection red line, the environmental quality bottom line and the hard constraints on resource utilization. Using coastal-water environmental control units as the basis and ecological-environment access lists as the tool, it promotes precise, region-specific control of the coastal ecological environment. Since 2017, coastal areas have gradually explored and practiced ecological-environment control in coastal waters, delineating 3,036 environmental control units in coastal waters to match industrial development with environmental carrying capacity. Xiamen pioneered an application system for ecological-environment zoning control in China, which effectively resolved pain points such as difficult site selection for enterprises, long approval times and slow project implementation. The city delineated 42 coastal-water environmental control units, raising the level of integrated land-sea management and promoting the transformation and upgrading of coastal industries. In 2024, the Opinions on Strengthening the Zoning Control of Ecological Environment were issued, calling for stronger ecological-environment zoning control in coastal waters and for a zoning control system for the marine ecological environment that covers all areas and is precise and science-based. The Opinions systematically deploy ecological-environment zoning control to provide important guidance for the various development, protection and construction activities in coastal waters.

(2) Carrying out monitoring and surveys

Monitoring and surveys of the marine ecological environment are the foundation of its protection. China has steadily improved an ecological environment monitoring network that integrates space-based, land-based and sea-based observation, strengthened the monitoring, evaluation and early warning of marine ecological quality, and established a clear picture of baseline conditions, providing a basis for decisions on supervising and managing the marine ecological environment.

Comprehensively carrying out marine ecological environment monitoring. China continues to optimize the layout of its marine ecological environment monitoring network, focusing on coastal waters while covering all waters under its jurisdiction, and is building a modern monitoring system that treats land and sea, and rivers and sea, as integrated wholes. National and local resources have been pooled to build a national marine ecological environment monitoring base and a national comprehensive ecological quality monitoring station. Built on 1,359 state-controlled seawater quality monitoring sites, the system covers 15 monitoring tasks in four categories: marine environmental quality monitoring, marine ecological monitoring, special-purpose monitoring, and monitoring for marine supervision. China continues to strengthen its capacity to monitor emerging concerns such as marine litter, microplastics, radioactivity, new pollutants, and carbon sources and sinks; strengthens monitoring of the health of typical ecosystems such as mangroves; is gradually establishing a unified platform for transmitting and sharing marine ecological environment monitoring data; and regularly publishes seawater quality monitoring data.

Coordinating marine ecological early warning and monitoring. With the goal of gaining a clear understanding of "the distribution pattern of marine ecosystems, the current state and evolution trends of typical ecosystems, and major ecological problems and risks", China is building an operational ecological early warning and monitoring system that focuses on coastal waters, covers all waters under its jurisdiction, and extends to the polar regions and key deep-sea areas. In coastal waters, investigation and monitoring concentrate on the distribution areas of typical ecosystems such as important estuaries, bays, coral reefs, mangroves, seagrass beds and salt marshes, and on areas at high risk of ecological disasters. Across the waters under China's jurisdiction, ecological problems such as sea level change, seawater acidification and hypoxia are analyzed and evaluated, full-coverage monitoring of the major marine ecosystem types is being achieved, and ecological monitoring is being expanded to the polar regions and the deep sea. During the "Tenth Five-Year Plan" period, more than 1,600 coastal ecological trend monitoring stations were in place, and national surveys of the ecological status of coral reefs, coastal salt marshes and seagrass beds, as well as general surveys of estuaries and seaweed fields, were completed. China compiles and publishes the Bulletin on Marine Ecological Early Warning and Monitoring in China. It is exploring early warning methods for typical marine ecosystems, and coral reef bleaching early warning is now largely operational.

Conducting baseline surveys of marine pollution. To gain a systematic understanding of the state of the marine ecological environment, China carried out baseline surveys of marine pollution in 1976, 1996 and 2023, establishing the basic conditions in each period. The third survey covers four components: marine environmental pollutants, pollution sources discharging into the sea, environmental pressures and ecological impacts along the coast, and detailed surveys of bays. The basic data it yields support decision-making for scientifically evaluating China's marine ecological environment and for formulating and implementing the country's strategies and policies for marine ecological environment protection.

(3) Strict supervision and law enforcement

China upholds coordination between supervision and law enforcement, cooperation among departments, and linkage between central and local governments. It has built a three-dimensional marine supervision and law enforcement network with full coverage, and severely investigates and punishes the illegal use of sea areas and islands and the destruction of the marine ecological environment.

Comprehensive supervision of sea areas and islands has been continuously optimized. China continues to strengthen its capacity for comprehensive supervision of sea areas, islands and coastlines, is accelerating the construction of a supervision system that spans the whole chain and all fields, from before to after the event, and gives full play to comprehensive supervision in maintaining order in the use of sea areas and islands, holding the bottom line of resource security, promoting ecologically sound use of sea areas and islands, and supporting high-quality development. China has built and now operates systems including a sea area and island supervision system, a marine ecological restoration supervision system and a "one map" information system for territorial spatial planning, and combines satellite remote sensing with sea-based and shore-based observation to track the use of sea areas, changes in island spatial resources and the state of the ecological environment. Using remote sensing monitoring, sea and shoreline patrols and other means, it conducts high-frequency supervision of sea areas, islands and coastlines. This supervision focuses on sea-use activities such as land reclamation, ecological restoration projects, drilling platforms, submarine optical cables and cross-sea bridges, and on important areas such as areas rich in sea sand, offshore oil and gas exploration and development zones, marine dumping areas, and aquaculture and fishing areas. It aims to nip illegal activities affecting the marine ecological environment in the bud and to keep improving the effectiveness of marine supervision and law enforcement.

Comprehensive law enforcement for marine environmental protection has been steadily strengthened. In recent years, comprehensive law enforcement has been carried out across the waters under China's jurisdiction, with regular inspections of marine engineering projects, marine nature reserves, fisheries, maritime transport and other sectors. The "Haidun" (Sea Shield) special enforcement campaign strengthens coastline protection and reclamation control; the "Green Shield" campaign supervises nature reserves; the "Bihai" (Blue Sea) special enforcement campaign cracks down hard on illegal acts that damage the marine ecological environment; and the "Lan Jian" and "China Fishery Administration Bright Sword" special enforcement campaigns strengthen the protection of fishery resources. Together these campaigns have created a powerful deterrent against illegal acts affecting the marine ecological environment. From 2020 to 2022, more than 19,000 inspections were conducted of marine projects, oil platforms, islands and dumping areas, more than 360 cases of illegal reclamation, illegal dumping and damage to islands were investigated, and illegal and criminal activities in key areas of marine ecological environment protection were severely punished.

(4) Strengthening assessment and inspection

Implementing the target responsibility and evaluation systems for marine environmental protection, and conducting central ecological and environmental protection inspections and national natural resources inspections, are important measures for resolving prominent problems in the marine ecological environment, consolidating local responsibilities and encouraging officials to take on responsibility.

Implementing the target responsibility and evaluation systems for marine environmental protection. The Environmental Protection Law, revised in 2014, introduced a target responsibility system and an evaluation system for environmental protection. In 2015, the Action Plan for Water Pollution Prevention and Control incorporated core task indicators, such as the proportion of coastal waters with excellent water quality, into the target responsibility assessments of coastal local governments. In 2020, coastal water quality was included in the system for evaluating the effectiveness of pollution prevention and control, with coastal water quality requirements raised year by year. The Marine Environmental Protection Law, revised in 2023, makes clear that local people's governments at or above the county level in coastal areas are responsible for the marine environmental quality of the sea areas under their administration. Assessment results serve as an important basis for rewarding, penalizing, promoting and appointing leadership teams and leading officials at all levels, and play an important guiding role in consolidating the responsibilities of coastal local governments and encouraging officials to take on responsibility. Zhejiang has established a comprehensive marine ecology evaluation system whose results are incorporated into the evaluation systems for its "Five Waters Co-governance" and "Beautiful Zhejiang" initiatives, effectively motivating leading officials to take initiative and get things done.